13.11.2024

written by Iana Stavnicha

As a QA Engineer at AURENA Tech, my role revolves around ensuring the quality and reliability of our products and auction platform, but recently, I’ve been feeling the pull of something bigger — AI.

I mean, can you blame me?

Our QA Engineers Sara and Iana at Testing United, “AI Augmented QA: Challenges, Opportunities, and Lessons from the Past”, November 7-8, 2024, Vienna

It’s a game-changing presence that everyone’s buzzing about, and I’ve dabbled here and there, using it mostly for fun and exploration. But this conference felt like the perfect opportunity to dig deeper and get serious about integrating AI into my work. I wanted to find lean, practical tools to boost my productivity, but I was also looking for fresh ideas and inspiration to push my QA process to a whole new level. I hoped to come away with not just tools, but a bigger vision of how AI could transform the way we work at AURENA Tech.

Here, I want to share some of my key takeaways with you:

In today’s fast-paced tech environment, using AI to simply “get tasks done” isn’t enough—or even fair to yourself. The first time I heard of AI assistants, I thought, “That’ll just make me lazy! Do I even want to fall into that trap?” But with time, I realised the potential for AI not only to follow commands but to act as a coach, guiding you by asking insightful, probing questions that can shift your perspective and inspire your inner problem-solver.

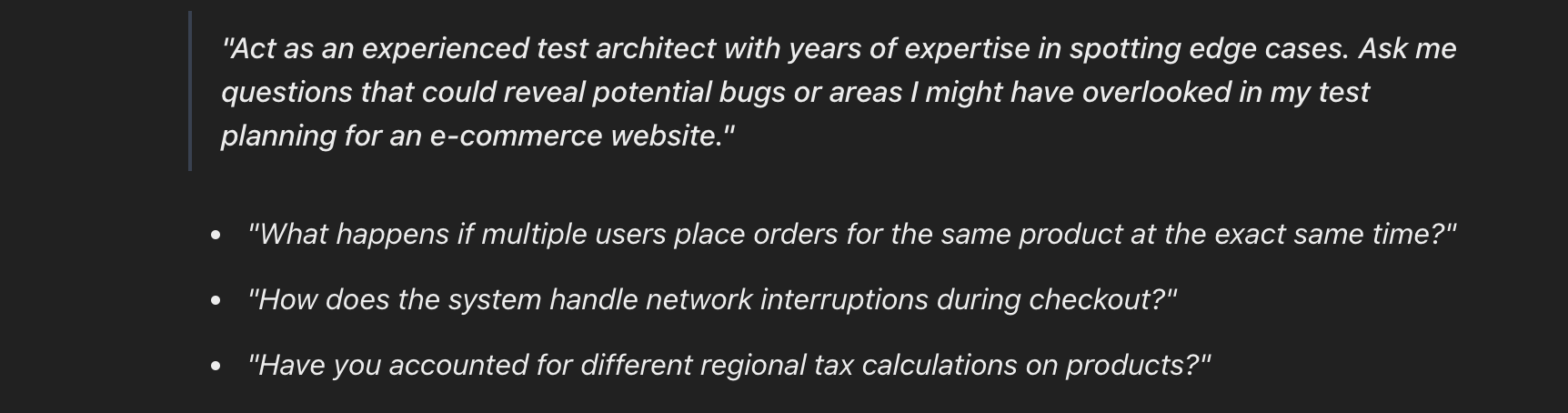

Instead of treating AI like a silent assistant, you can use prompts to give it a personality — a persona that aligns with the guidance you need. Try something like this:

“Act as an experienced test architect with years of expertise in spotting edge cases. Ask me questions that could reveal potential bugs or areas I might have overlooked in my test planning for an e-commerce website.”

Screenshot from ChatGPT

With this approach, the AI becomes an active participant in your flow, pushing you to think more broadly rather than just spitting out answers. It begins to challenge your assumptions, encouraging you to explore edge cases and flows you might otherwise miss. For developers and testers, this mentorship-style relationship with AI can be a game changer.
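If you talk to a model from a script rather than a chat window, the same persona idea can be wrapped in a small helper. This is only a sketch: the function name `build_persona_messages` is my own invention, and the `role`/`content` message layout is an assumption based on the format many chat-style APIs accept, so adapt it to whatever tool you actually use.

```python
def build_persona_messages(persona: str, task: str, context: str = "") -> list[dict]:
    """Return chat messages that give the assistant a persona and ask it to question us."""
    system = (
        f"Act as {persona}. "
        "Do not give answers straight away. Ask me probing questions first."
    )
    # Optional context is appended to the task so the model sees both together.
    user = task if not context else f"{task}\n\nContext:\n{context}"
    return [
        {"role": "system", "content": system},
        {"role": "user", "content": user},
    ]


messages = build_persona_messages(
    persona="an experienced test architect with years of expertise in spotting edge cases",
    task=(
        "Ask me questions that could reveal potential bugs or areas I might have "
        "overlooked in my test planning for an e-commerce website."
    ),
)
for message in messages:
    print(f"{message['role']}: {message['content']}")
```

Keeping the persona in its own message makes it easy to swap the "coach" without touching the actual question.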

Get AI working with you, not just for you.

We heard an interesting quote at the conference, but it’s too juicy to include, so I’ll rephrase: the quality of AI’s output is only as good as the input it receives.

That’s why it’s incredibly important to give AI the context it needs to deliver higher quality responses. Ask questions like, “What prompt should I use to debug this specific bug?” or “What extra context would help you catch any hidden weak points in this flow?” When you interact with AI this way, you’re not only refining its output, but you’re also minimising misunderstandings.

But even with great context, I believe human validation is essential. AI-generated outputs might seem polished, but it’s up to us to apply that final check for context, nuance, and accuracy. No one knows better than we QA engineers that this step is simply about ensuring quality: we make sure that automation enhances productivity without the risk of blind reliance.

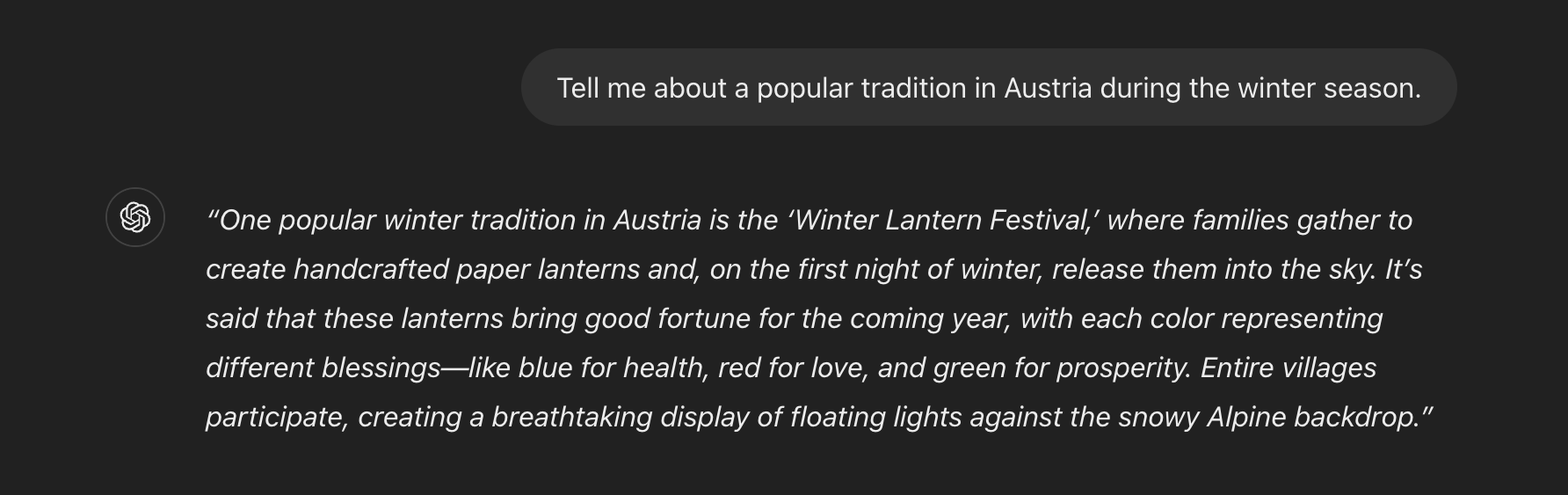

Just like any human being, AI (almost like a real boy or girl) can hallucinate, and there are plenty of examples of that:

Screenshot from ChatGPT

Spoiler alert: there’s no “Winter Lantern Festival” in Austria. While lantern festivals are popular in some Asian countries, this is a completely invented tradition. In Austria, more accurate traditions include St. Nicholas Day and Krampus parades, but trusting this answer would create confusion, especially in travel or cultural contexts.

The list could go on and on, but I only have one article’s worth of space, so let’s make it count with a few more possibilities worth exploring:

Enhancing Diagnostics and Error Analysis

AI tools like LAM can dive into error logs, triage issues, and flag clusters to simplify debugging. That means less manual error-checking. It’s not a traditional use for LAM, but we are here for anything but traditional approaches.
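To make "flagging clusters" a bit more concrete, here is a minimal sketch of the grouping step in plain Python. It is not how LAM or any AI tool works internally; it is a simple, non-AI stand-in that collapses numbers and hex ids so that similar error lines land in the same bucket. The log lines and the function names are made up for illustration.

```python
import re
from collections import Counter


def signature(line: str) -> str:
    """Collapse variable parts (hex ids, numbers) so similar errors share one key."""
    line = re.sub(r"0x[0-9a-fA-F]+", "<HEX>", line)
    line = re.sub(r"\d+", "<N>", line)
    return line.strip()


def cluster_errors(lines: list[str]) -> list[tuple[str, int]]:
    """Count ERROR lines per signature, most frequent first."""
    counts = Counter(signature(line) for line in lines if "ERROR" in line)
    return counts.most_common()


# Invented sample log, not real platform output.
sample_log = [
    "ERROR Timeout after 3000 ms calling /bids/17",
    "INFO Auction 42 opened",
    "ERROR Timeout after 3000 ms calling /bids/99",
    "ERROR Payment 8841 rejected",
]
for sig, count in cluster_errors(sample_log):
    print(count, sig)
```

The biggest cluster is usually the best place to start triage, and it also makes a compact piece of context to hand to an AI assistant.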

Automating Test Scenario Generation and Scripting

AI can auto-generate test cases based on past bugs or requirements, covering edge cases you might miss. Additionally, you can use Cursor IDE as your AI code editor to make scripting smoother.

Augmenting Exploratory Testing

AI suggests exploratory paths based on prior issues or high-traffic features.

Leveraging AI for Predictive Analytics

Use AI to predict where issues are likely to pop up, focusing resources on high-risk areas.
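A real predictive model would learn from your defect history, but the underlying idea can be shown with a hand-made heuristic. The sketch below ranks areas of a product by a weighted mix of past bugs and recent code changes. Everything in it is an assumption for illustration: the module names, the numbers, and especially the weights, which are arbitrary placeholders rather than tuned values.

```python
from dataclasses import dataclass


@dataclass
class ModuleStats:
    name: str
    past_bugs: int        # defects previously found in this area
    recent_commits: int   # how much the code changed lately


def risk_score(module: ModuleStats, bug_weight: float = 2.0, churn_weight: float = 1.0) -> float:
    """Weighted sum of bug history and churn; the weights are placeholders."""
    return bug_weight * module.past_bugs + churn_weight * module.recent_commits


def rank_by_risk(modules: list[ModuleStats]) -> list[ModuleStats]:
    """Return modules sorted from highest to lowest risk score."""
    return sorted(modules, key=risk_score, reverse=True)


# Invented example data.
modules = [
    ModuleStats("checkout", past_bugs=7, recent_commits=12),
    ModuleStats("search", past_bugs=2, recent_commits=30),
    ModuleStats("profile", past_bugs=1, recent_commits=3),
]
for module in rank_by_risk(modules):
    print(module.name, risk_score(module))
```

Even a rough ranking like this gives you a starting point for deciding where to spend exploratory testing time first.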

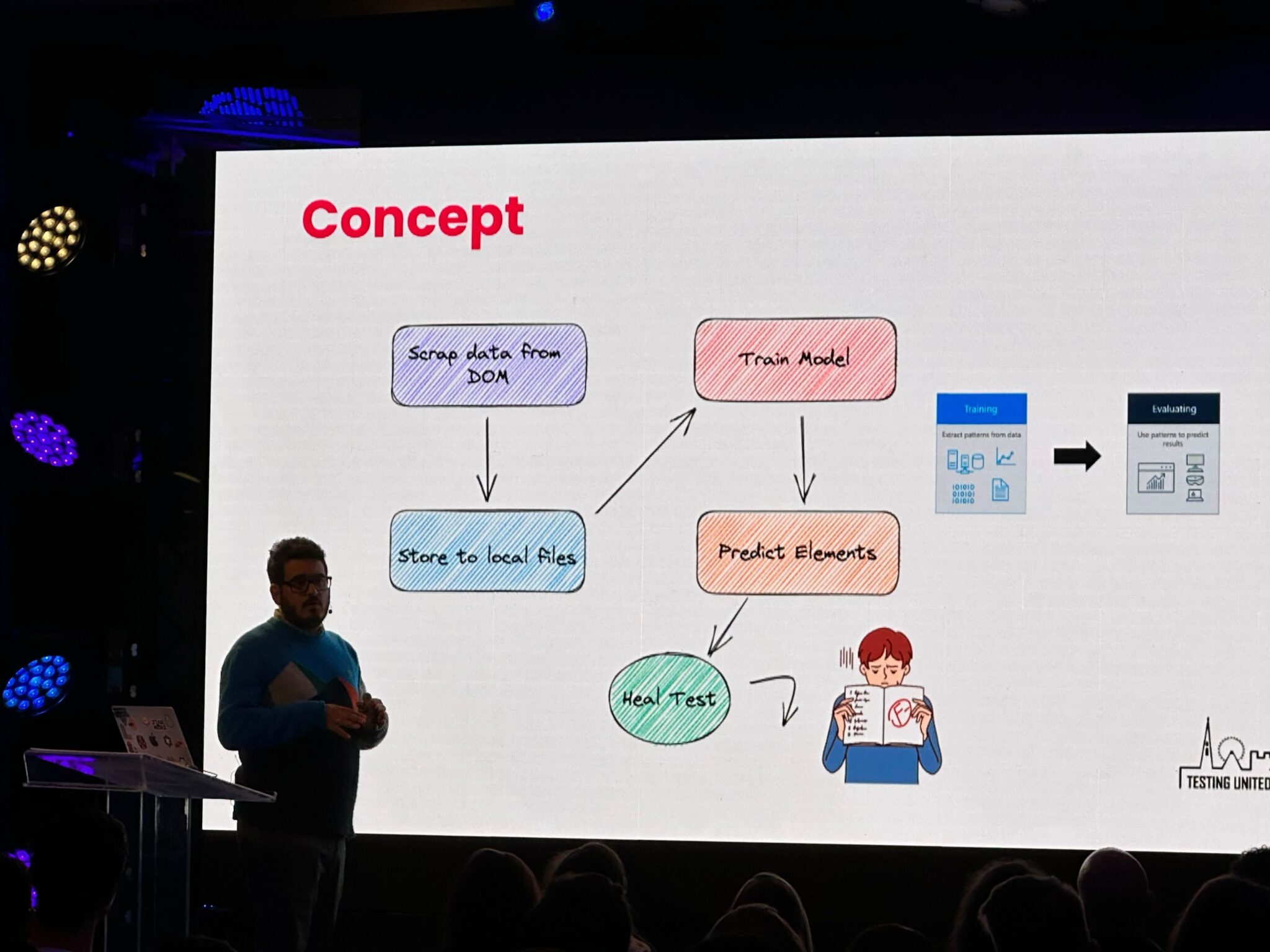

AI-driven self-healing tests

These tests come with built-in self-detection and self-correction capabilities that adapt to UI changes on the fly. By automatically updating locators and fixing minor issues, these tools minimize test flakiness and reduce maintenance overhead.
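The locator-updating part can be sketched in a few lines. Commercial self-healing tools typically use smarter matching than this; the version below is a deliberately simple stand-in that tries a list of fallback locators and promotes whichever one worked. The page is modeled as a plain dictionary instead of a real browser, and all locator strings and function names are hypothetical.

```python
def find_element(page: dict, locators: list[str]) -> tuple[str, str]:
    """Try locators in order; return (locator_that_worked, element)."""
    for locator in locators:
        if locator in page:
            return locator, page[locator]
    raise LookupError(f"No locator matched: {locators}")


def self_healing_find(page: dict, locators: list[str]) -> str:
    """Find the element and move the working locator to the front ("healing")."""
    used, element = find_element(page, locators)
    if used != locators[0]:
        locators.remove(used)
        locators.insert(0, used)
    return element


# Assume the button id changed from "buy-btn" to "purchase-btn" in a new release.
page_after_release = {"#purchase-btn": "<button>Buy now</button>"}
buy_button_locators = ["#buy-btn", "#purchase-btn", "text=Buy now"]

element = self_healing_find(page_after_release, buy_button_locators)
print(element)
print(buy_button_locators)
```

After the call, the working locator sits first in the list, so the next run does not waste time on the stale one. In a real suite you would also log each "heal" so a human can review it.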

Ioannis Papadakis: “Exploring Test Automation: A Comparative Analysis of Generative AI and Traditional ML Approaches”

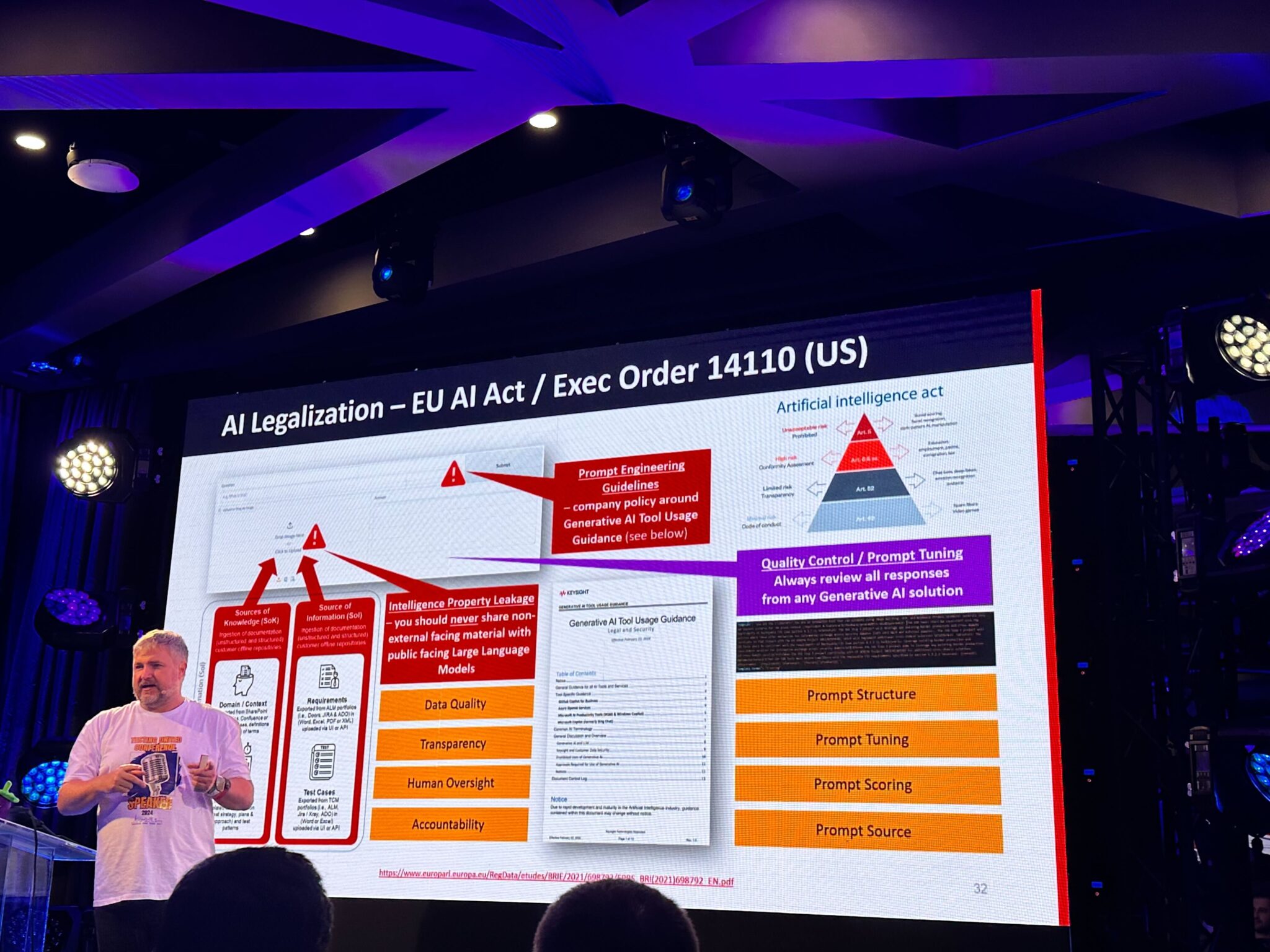

There are many far more vocal people warning us about the implications of AI. Let me add my two cents, because I’m still an idealistic pragmatist after all. The way I see it, using public AI models in company operations carries several legal implications that businesses must carefully consider. Intellectual property rights are a key concern, as using pre-trained models may infringe on proprietary technologies or data if the model’s training sources aren’t clear or permitted for commercial use. Data protection regulations, like GDPR in the EU, also impose strict requirements on data handling, which companies must observe to avoid fines or litigation. Additionally, evolving AI-specific regulations, such as the EU AI Act (in force since August 2024, with obligations phasing in over the following years), aim to set compliance standards around transparency, fairness, and accountability in AI systems. It has been stated that training advanced AI models without incorporating copyrighted materials is currently unfeasible. Public AI model companies indicate that a significant portion of their training data includes copyrighted content, though the exact percentage remains undisclosed.

It’s not all roses and sunshine—you’ll find plenty of people who oppose AI much more than I can explain here, citing ethical concerns and unforeseen risks. As a result, companies need rigorous assessment and ongoing legal oversight to ensure that integrating public AI models aligns with both current laws and ethical standards, protecting them from costly legal challenges and reputational damage.

Jonathon Wright: AI-Augmented Testing: “How Generative AI And Prompt Engineering Turn Testers Into Superheroes, Not Replace Them”

Workshop about Modern Performance Engineering: This workshop emphasized the critical role of performance testing for me. The session included real-world examples, best practices, and common challenges. One of the key takeaways was learning how to enhance observability in performance results, with tools and techniques for investigating these insights later on, on our own laptops.

Using AI Throughout the Software Development Lifecycle (SDLC): An important question raised by Florian Fieber: “Would you use only AI through every phase of the SDLC, from analysis to release, and release software without any human involvement?” Most would agree that AI alone is not enough for the final acceptance and release phases, where human oversight remains essential. The final decision to release a product involves understanding real-world user impact, risk assessment, and long-term business consequences. Humans bring judgment that considers customer needs, brand reputation, and ethical concerns, factors that go beyond what AI can evaluate.

“AI will not replace you; a person using AI will.” This idea was shared at a recent conference we attended. It emphasizes that AI is not a replacement for human testers but a tool that enhances their abilities. Testers who leverage AI tools effectively can process data faster, identify patterns, and focus on more complex tasks while AI handles routine testing. By adopting AI strategically, testers can gain a competitive edge in the field.

To end this article with a bang, here is a trick for you: if you want that real-time ChatGPT response, just slip a small tip (under $200) into your prompt. But let’s hope those tips won’t get deducted from our salaries by 2035!

Screenshot from ChatGPT